There are always going to be times when you need to upload multiple files to SharePoint. Mostly, I simply map the document library as a drive and copy the files that way. However, that option isn't always available. The prime examples are the Site Assets and Site Pages document libraries, which don't allow mapping. For those situations, I use the following code to upload the whole directory tree. It uses the Command Line Parser Library that I spoke of in an earlier post and the Microsoft SharePoint Client libraries. Here is the options file:

And here is the program file:
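The embedded files are not reproduced here, so the following is only a minimal sketch of the directory-walking half of the job, not the original program. It assumes a hypothetical helper named `UploadPlanSketch` that pairs every local file with the library-relative URL it would be uploaded to. The SharePoint calls themselves are deliberately left out.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper (not the original program): it only plans the upload.
public static class UploadPlanSketch
{
    // Returns (local file, library-relative URL) pairs for every file under localRoot.
    // Assumes localRoot is an ordinary folder, not a drive root.
    public static List<KeyValuePair<string, string>> Plan(string localRoot, string libraryRootUrl)
    {
        var plan = new List<KeyValuePair<string, string>>();
        string root = Path.GetFullPath(localRoot)
            .TrimEnd(Path.DirectorySeparatorChar, Path.AltDirectorySeparatorChar);

        foreach (string file in Directory.EnumerateFiles(root, "*", SearchOption.AllDirectories))
        {
            // Path of the file relative to the root, with URL-style separators.
            string relative = file.Substring(root.Length + 1)
                .Replace(Path.DirectorySeparatorChar, '/');
            string url = libraryRootUrl.TrimEnd('/') + "/" + relative;
            plan.Add(new KeyValuePair<string, string>(file, url));
        }

        return plan;
    }
}
```

Each pair in the returned list would then be handed to whatever upload call you use, creating the target folders first where the library requires it.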

Enjoy! And keep coding!

This is my blog about coding in C#. C# is what I do, so C# is what I am going to talk about.

Tuesday, February 28, 2017

Saturday, February 25, 2017

Scrivener and Images

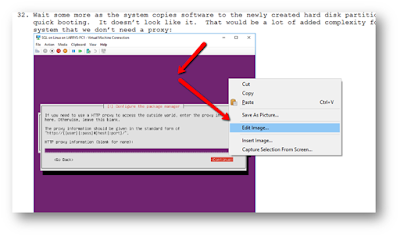

Hey all! A quick note on using Scrivener for doing Hands On Labs or other technical documents with tons of screen shots. I really like the way that it allows me to lay out my thoughts easily, but it isn't really built for dealing with tons of images. It isn't easy, but this tip can help make it a little less painful. Once you have pasted your screen shot into your document, right click on it and choose Edit Image... from the context menu:

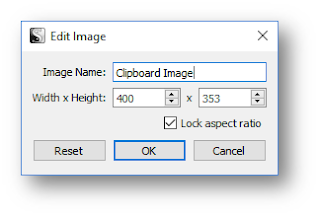

Once on the dialog box, set the width and name:

That way you can resize your images to the same width and keep them consistent within your document without having to try and eyeball the sizes. I will have to go back through my other HOL and update the images there.

Figure captions for this post: Context menu on an image in Scrivener; Edit Image dialog box.

Good coding!

Friday, February 24, 2017

Reading Structured Binary files in C#: Part 10

I am not happy with what I am doing, but it is the best that I can see clear to do. The dissection of the binary files has reached the SectionTable portion, and here we run into the limitation of using a communication tool such as SpecFlow as a framework for testing. The ScenarioOutline in SpecFlow allows us to provide an Examples table, but the table is limited. I will need to introduce some logic into the steps that blurs some lines that I generally want crisp.

The section table contains an arbitrary but known number of sections of indeterminate types. We 'know' that there are three, and will test for that. But how will we test the values? I will test that they are in the same order among the files, and will test each type separately. I will include multiple entries within a field as a comma delimited list and have the step split the list for comparison. Here is what it looks like testing one file when completed:

It isn't pretty, but it gets the job done. The step file for this test looks like this:
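The step file itself is not reproduced here. As a rough sketch of the split-and-compare idea described above, assuming a hypothetical helper class `DelimitedListSketch` (the section names in the usage note are illustrative only):

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for a step definition; not taken from the project.
public static class DelimitedListSketch
{
    // Splits "a, b, c" into trimmed entries.
    public static string[] Split(string delimited)
    {
        return delimited.Split(',').Select(entry => entry.Trim()).ToArray();
    }

    // True when the actual values match the expected list, in the same order.
    public static bool MatchesInOrder(string expectedDelimited, IEnumerable<string> actual)
    {
        return Split(expectedDelimited).SequenceEqual(actual);
    }
}
```

A step could then assert `DelimitedListSketch.MatchesInOrder(".text, .rsrc, .reloc", namesReadFromFile)`, which keeps the Examples table to one cell per field at the cost of a little parsing logic in the step.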

If you read this far, I hope you skipped a bunch. You see what I mean about ugly. If you know of a better way to accomplish this with SpecFlow, please speak up!

Keep your bits in line.


Tuesday, February 21, 2017

Making MSTest work from a command line when test artifacts are involved

Last night I was messing around with incorporating code coverage tests into the DissectPECOFFBinary project and ran into an issue with tests passing in VS 2015 but failing at the command line with MSTest. Thankfully, I found the answer in the blogosphere. Kudos to Ryan Burnham and his post Loading External Files for Image Comparison using Specflow and MSTests, which showed me the way. Quick summary: add partial classes for your features and tack on DeploymentItem attributes pointing to the files you need copied. A fully verbose version is as follows:

- Highlight the binary files, right click on them, and click Properties in the context menu

  Figure: Set artifacts to copy on build

- Set the Copy to Output Directory of the files to Copy Always

  Figure: Set Copy to Output Directory to Copy Always

- Update the solution by adding partial classes for each feature file with attributes

- Update the step files to fall back to the appropriate file path. This has to be done because the copied file will be placed in the root of the folder in the TestResults directory created by MSTest.
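The fallback in the last step can be sketched as below. This is an assumption about the shape of the logic, not the project's code, and `TestArtifactPathSketch` is a hypothetical name. The partial feature classes carrying the DeploymentItem attributes are not shown because they need the MSTest assemblies.

```csharp
using System.IO;

// Hypothetical helper for the step files; not the project's actual code.
public static class TestArtifactPathSketch
{
    // Prefer the project-relative path (works inside Visual Studio). Otherwise
    // fall back to the bare file name, because a deployed copy sits in the root
    // of the run folder that MSTest creates under TestResults.
    public static string Resolve(string projectRelativePath)
    {
        if (File.Exists(projectRelativePath))
        {
            return projectRelativePath;
        }

        return Path.GetFileName(projectRelativePath);
    }
}
```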

Done. MSTest now correctly works from the command line.

May your code compile fully optimized.


Creating an open source project: Automating the build process

AppVeyor provides free automated builds for Open Source projects, and it is truly painless to get started. You just authorize the application to access your GitHub account, create a new AppVeyor project, select your repository, and build! Well, almost that easy. The one thing that I did have to do is add a pre-build script to restore the NuGet packages:

Once that was done, the build functioned and the tests all ran. I even added a build badge to the README file.

[edit] I also ended up having to add a pointer to the solution file in the build settings to get the automated builds on checkin to work correctly:

Next, I am going to get CodeCov integration working. It is based on OpenCover and is another free resource for Open Source projects. I am having some trouble with the command line version of MSTest, so it isn't a tonight thing.

Figure captions for this post: Add NuGet restore packages command to build; Add solution file to AppVeyor build settings.

Keep your code clean and covers on!

Saturday, February 18, 2017

Memory usage in C#: StringBuilder versus String concatenate The RIGHT WAY

Thanks to some wonderful feedback, I am going to re-do my previous article on memory usage. I am leaving the other as a warning and reminder. I have no shame in admitting my mistakes; it is the only way that we grow and learn. ;-)

Anyway, there were several things that were not the best about the previous method I used, but the two core ones I am going to address here are my cavalier handling (or not handling) of exceptions and using Stopwatch and WorkingSet64 to roll my own bench-marking tool.

Let's examine the first one. I knew that the only exception that I was wanting to ignore was the UnauthorizedAccessException, so why was I eating them all? Laziness, pure and simple. I know better! So I fixed that by just catching that exception. Simple.

Then I looked at the BenchmarkDotNet project and cried a little tear of joy. Really! It was small, but it was real. It has more than enough bells and whistles to make me happy. Ok, now how do I implement BenchmarkDotNet? Super easy:


- Install the NuGet Package by right clicking on the project and clicking Manage NuGet Packages...

  Figure: Manage NuGet Packages

- Click the Browse tab and type in BenchmarkDotNet, choose the top entry and click Install

  Figure: Install BenchmarkDotNet

- Accept the dependencies

  Figure: Accept the dependencies

- And the licenses

  Figure: Accept the licenses

- Wrap the old nastiness into a new class and add attributes on some new runner methods. It does yell at you a little if you don't run in release mode, so make that change. ;-)
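The wrapped class is not reproduced here, so this is only a sketch of what the last step can look like. It assumes the BenchmarkDotNet NuGet package is referenced. The class name `StringBuildingBenchmarks` and the small loop workload are stand-ins for the real file-attribute walk.

```csharp
using System.Text;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;

// Hypothetical benchmark class; the loop is a stand-in for the real workload.
[MemoryDiagnoser]
public class StringBuildingBenchmarks
{
    // Number of pieces appended per benchmark invocation.
    [Params(1000)]
    public int Count;

    [Benchmark(Baseline = true)]
    public string Concat()
    {
        string result = string.Empty;
        for (int i = 0; i < Count; i++)
        {
            result += i + ";";
        }
        return result;
    }

    [Benchmark]
    public string Append()
    {
        var builder = new StringBuilder();
        for (int i = 0; i < Count; i++)
        {
            builder.Append(i).Append(';');
        }
        return builder.ToString();
    }
}

public static class BenchmarkEntryPoint
{
    // Call this from Main in a Release build.
    public static void Run()
    {
        BenchmarkRunner.Run<StringBuildingBenchmarks>();
    }
}
```

Returning the built string from each method keeps the work from being optimized away, and the memory diagnoser adds allocation columns to the default summary.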

Clean and easy. And the default output is outstanding. It does run 16 trials by default, so I ran it against the following test directory rather than the full drive:

Here are the results as an image, so you can marvel at the pretty colors:

Figure: Benchmark Results

And here is a Gist of the full set of results:

In conclusion, well, the same thing as before. But this time it is more accurate, and actually gives additional information. BenchmarkDotNet is a must have arrow in your quiver. If you show both the previous output and this output to someone, which one do you think will have the greater impact?

Keep your code clean and memory leak free!

Memory usage in C#: StringBuilder versus String concatenate The WRONG WAY

Do not use this as an example of how to do benchmark testing! I am leaving it here as an example of how NOT to do it. Look at the next post for the RIGHT way (or at least more right than this). You have been warned.

For fun I built a program that is a memory hog. I used recursion and appended strings using the '+' operator and built a bunch of objects. My thought was that I would stress out the garbage collector with the recursion and string nastiness. Then I went back and tried the same without the string mess by using StringBuilder. I left the object creation and recursion the same. What do you think happened? Here is the code, and my results follow.
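The original listing is not reproduced here. A minimal sketch of the two variants, assuming a hypothetical class `AttributeWalkSketch` and a simple "path|attributes" line per file, could look like this. Unlike the original, it only swallows UnauthorizedAccessException.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical reconstruction of the idea, not the original listing.
public static class AttributeWalkSketch
{
    // Recursively builds "path|attributes" lines with the + operator.
    public static string Concat(string directory)
    {
        string result = string.Empty;
        try
        {
            foreach (string file in Directory.GetFiles(directory))
            {
                result += file + "|" + File.GetAttributes(file) + Environment.NewLine;
            }
            foreach (string child in Directory.GetDirectories(directory))
            {
                result += Concat(child);
            }
        }
        catch (UnauthorizedAccessException)
        {
            // Skip directories the current user may not read.
        }
        return result;
    }

    // Same walk, but every level appends to one shared StringBuilder.
    public static string Append(string directory)
    {
        var builder = new StringBuilder();
        Append(directory, builder);
        return builder.ToString();
    }

    private static void Append(string directory, StringBuilder builder)
    {
        try
        {
            foreach (string file in Directory.GetFiles(directory))
            {
                builder.Append(file).Append('|').Append(File.GetAttributes(file)).AppendLine();
            }
            foreach (string child in Directory.GetDirectories(directory))
            {
                Append(child, builder);
            }
        }
        catch (UnauthorizedAccessException)
        {
            // Skip directories the current user may not read.
        }
    }
}
```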

The results are:

ReadAllFilesAttributesAndConcat string length = 117,158,296

ReadAllFilesAttributesAndConcat max memory = 1,756,577,792

Duration =21:56

ReadAllFilesAttributesAndAppend string length = 24,809,733

ReadAllFilesAttributesAndAppend max memory = 720,510,976

Duration =18:53

Second fun run:

ReadAllFilesAttributesAndConcat string length = 131,089,243

ReadAllFilesAttributesAndConcat max memory = 1,755,693,056

Duration =25:37

ReadAllFilesAttributesAndAppend string length = 38,738,692

ReadAllFilesAttributesAndAppend max memory = 795,697,152

Duration =20:53

and ran it again against my D: and the results are as follows:

ReadAllFilesAttributesAndConcat string length = 13,362,511

ReadAllFilesAttributesAndConcat max memory = 304,152,576

Duration =01:50

ReadAllFilesAttributesAndAppend string length = 13,362,511

ReadAllFilesAttributesAndAppend max memory = 192,352,256

Duration =01:37

In the first runs, using '+' to concatenate strings peaked at about 1.76 GB, while using StringBuilder peaked at only about 0.72 GB. In the corrected run we see about 0.30 GB for the first and 0.19 GB for the second. The string lengths were the same and StringBuilder was faster.

The moral to the story? Test your code! Also, StringBuilder for the win! Other things to note: I should not have run it against the main drive, as it introduces variability because of swap files and such. Also, it took way too long for this to run. I am running it on an SSD, and it still took over 40 minutes to finish each run. I might try something smaller than my C: next time, but I wanted to make sure and fully exercise the methods without having to be smart about building a test string generator. That'll learn me. :-) Last, my strings grew to 117 million characters. That is a lot of stuff! Would the memory usage difference have been smaller if I hadn't pushed it with such long strings? Probably. Would the performance difference have been smaller? Again, probably.

Keep your code clean!

Thursday, February 16, 2017

Reading Structured Binary files in C#: Part 9

I have added additional tests and checked them into the GitHub repository for Dissect PECOFF Binary, and there are some interesting things that I have learned. The main thing is that each of the sections should have a starting location method. In order to properly test, I need to be able to read the sections separately. At times I will need to know values from previously consumed structs, so I pass those in to the method and let the method sort it out. The next thing I noticed is that there are a ton of properties to test. Seriously, the OptionalHeaderWindowsSpecificPE32 alone has 21. Another thing is that this makes it really easy to spot differences between the files! I just copy the example line and change the file name that I am testing against and any differences show up as errors.

Anyway, I have added a lot of tests, check it out.


Tuesday, February 14, 2017

What does MS SQL Server on Linux mean?

I recently took a look at the state of PostgreSQL and C# and reveled in the maturity of the tooling around it. Now, I want to think about what Installing SQL Server on Ubuntu means. For a long time, if I thought of Enterprise, Linux, and Databases together in a single thought the only things that came to mind were Oracle or PostgreSQL. I know that I am doing a disservice to MySQL and a ton of other solid relational databases, but that is what I knew. I won't even mention MongoDB or the other NoSQL databases because they fill a different niche. Now, I have to add MS SQL Server to the mix. What does it look like? How do you manage it? Are there any differences that we need to take into consideration when we program against it? What is the update story? Will it support running SharePoint?!?! Those are questions I hope to answer over the coming weeks as I start yet another series of articles.

Monday, February 13, 2017

Reading Structured Binary files in C#: Part 8

This post is going to follow my addition of SpecFlow tests to the DissectPECOFFBinary program. I added the SpecFlow project and set it up to use MSTest in the previous post, so this will be pure testing. One thing that I left out of the previous post is that you will need to install the IDE integration package as outlined in the Getting Started section of the SpecFlow site. That done, the first thing that you need to do is get your testing artifacts in place. In this case, they are the 22 binary files that we have been examining in the Dissecting C# Executables series. We can add more later, but for now these should be sufficient.


- Add a new folder in the project by right clicking on the project and clicking Add and New Folder

  Figure: Add new folder

- Name it TestArtifacts and then drag the executable files into it

  Figure: Test artifacts

Next, create a Feature file. We will add a feature for each of the parts, which gives us the most flexibility. Here are the steps to add the first one:

- Right click on the project and Add a New Item

  Figure: Add a new item

- Choose the SpecFlowFeatureFile and name the file MSDOS20Section.feature

  Figure: Add the MSDOS20Section.feature file

- Fill in the feature file with a Scenario Outline as follows:

- Right click in the feature file and click on Generate Step Definitions

  Figure: Generate Step Definitions

- Accept the defaults (they almost always are the best) and click Generate and then save the generated file

  Figure: Generate Steps

- Open up the new file and fill in the steps with good C# code. Well, first we need to fix the attributes. When the SpecFlow add-in generates the attributes it looks for numbers and other parts that it believes to be arguments. For us, we need to adjust the generated code to match this:

- Add a reference to the DissectPECOFFBinary project in the test project

- Update the first step to store the file name in the current context. This is an important concept: your steps need to be independent, but they can assume that certain information is available in the current context.

- Update the second step to read in the MSDOS20Section by...what?

  OK, here is where we need to make some decisions. Do we just add a method on the PECOFFBinary class that takes in a file name and reads the given section? Do we add a constructor for the PECOFFBinary class that takes in a file name and then add a method for reading the struct? Do we just open the file and read directly? Since this is the MSDOS20Section feature, we are going to read it directly.

  Add the code to open the file and read in the struct. You will need to change the visibility of the struct to public so that it can be used in the test project. There are ways around this, but for now we will just go with it.

  Add the structure you read to the current context.

- Get the MSDOS20Section from the current context and add an assertion that the expected value matches the found value

Your code should look something like this:

Now run your test! Wait, there is a problem! The test failed. Add a breakpoint on line 36 of the MSDOS20SectionSteps.cs file and debug the test by right clicking on it and choosing Debug Selected Test. Once you do, you can peek at the value the MSDOS20Section.Signature actually has by hovering your mouse over the property. I pinned the value to make it easier to capture as an image:

Figure: MSDOS20Section.Signature value within a test run

Where did the \u0090 come from and how do I add it to the test? After a quick Bing search I found that it is a Device Control String character. I played around a bit and found that I could type it in using the ALT+#144 method, but it doesn't show up visually in the feature file. The test passes, but that is not maintainable. Instead, I added an html encoded version to the Signature and used WebUtility.HtmlDecode() to process the incoming signature. That did it! The test passes!

But wait, there is more. We added the OffsetToPEHeader to the Example section, but we didn't have a step to test the value. OK, we need to add a step. Add a new line to the Scenario Outline, right click in the feature file again and choose Generate Step Definitions again and then Copy methods to clipboard. If you try to Generate again, it will attempt to replace the file you have been working in. By copying it to the clipboard, you are able to paste it in yourself. When you paste in the method, notice that the value comes in as a string. We are going to have to convert it to an unsigned integer before we can properly compare. We will just use a Convert.ToUInt16 and test the converted value.
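The two conversions described above can be sketched as follows. The helper name `ExampleValueSketch` and the sample values in the usage note are assumptions for illustration, not the values from the project's feature file.

```csharp
using System;
using System.Net;

// Hypothetical helpers for the step definitions; names are illustrative.
public static class ExampleValueSketch
{
    // Turns an HTML-encoded Examples cell such as "MZ&#144;" into raw characters,
    // so the invisible control character can be written visibly in the feature file.
    public static string DecodeSignature(string encoded)
    {
        return WebUtility.HtmlDecode(encoded);
    }

    // Examples cells arrive as strings; convert before comparing with a numeric field.
    public static ushort ParseOffset(string cell)
    {
        return Convert.ToUInt16(cell);
    }
}
```

For example, `DecodeSignature("MZ&#144;")` yields a three-character string ending in \u0090, and `ParseOffset("128")` yields 128.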

That is our start. Tons more to do, but I won't bore you with the mundane. If something else interesting comes up I will talk about it. I will check in what I have, passing test and all. ;-)

Keep testing!


Sunday, February 12, 2017

Reading Structured Binary files in C#: Part 7

What was I thinking? I have stood on the TDD soapbox for years, and I start an Open Source project with no tests? For shame! I have a single method doing two distinct jobs and the whole thing isn't really testable. *sigh* Time to fix the problems. First, let's add some tests!


Add a Test Project

I am a big fan of SpecFlow, even though I don't generally use it as intended. From the FAQ:

"SpecFlow aims to bridge the communication gap between domain experts and developers. Acceptance tests in SpecFlow follow the BDD paradigm of defining specifications with examples, so that they are also understandable to business users. Acceptance tests can then be tested automatically as needed, while their specification serves as a living documentation of the system."

The key here is communication. I have used it in the past as an automated acceptance testing tool, and it really shines! But I mostly just use it as a testing framework to help me keep my testing methods atomic. To begin with, let's add a new test project to our solution:

- Right click on your solution and click Add / New Project...

  Figure: Adding a new project to a solution

- Choose a Unit Test Project and name it something snappy, like the name of the base project with .SpecFlow appended to it, and click OK

  Figure: Add a Unit Test Project

- Delete the automatically included UnitTest1.cs file (not gonna show it!)

- Add the SpecFlow NuGet package (I work without a net and pull prerelease packages!)

  Figure: SpecFlow NuGet package

- Open the new App.config and add the following line as described in the Configuration documentation to allow SpecFlow to work with MSTest

<unitTestProvider name="MsTest" />

Refactor

I mentioned above that there was a method doing too many jobs. Let's fix that first. The method is the DissectFile method, and it opens the file, reads in a struct, and prints the struct. That is three distinct jobs, and having one method do all three keeps this from being a very testable program. How should we fix it?

First, let's pull the part that opens the file out. That way, we can just pass a stream to the dissection method. One job down.
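As a rough sketch of that first step (the names are hypothetical and the two-byte read is only a placeholder for the real dissection), the file-opening code stays in one method and everything else works on a Stream:

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical shape of the refactoring; not the project's actual code.
public static class DissectorSketch
{
    // Opening the file is the caller's job.
    public static void DissectFile(string path)
    {
        using (FileStream input = File.OpenRead(path))
        {
            Console.WriteLine(ReadSignature(input));
        }
    }

    // Works on any stream, so a test can hand it a MemoryStream.
    public static string ReadSignature(Stream input)
    {
        // The reader is not disposed here because that would close the caller's stream.
        var reader = new BinaryReader(input);
        byte[] bytes = reader.ReadBytes(2);
        return Encoding.ASCII.GetString(bytes);
    }
}
```

With this split, a test no longer needs a file on disk to exercise the reading logic.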

Next we should separate the reading from the writing. Since we have just been reading and then writing, we don't currently have an overarching structure to contain all of the pieces. That won't work any more. I need to build something that will contain the pieces in an order, but some of the pieces are optional. Hrm, this is an interesting problem! Is there anyone still reading? I am going to go into stream of consciousness mode now and try and peel back the covers on my thought process. When I get stuck, I just pick a way and try it out. Don't let yourself get stuck in analysis paralysis trying to find the perfect solution. Keep moving!

I am going to propose a simple, naive data structure in hopes that some of you smart people reading can suggest something better. After a quick Bing search on generic C# data structures...I am going to go with a System.Collections.Generic.LinkedList<T> for now. Things will get strange when I get to the optional nodes because some of them can be in any order, but for now I will start there. Here are my refactoring steps:

- Add a new IPECOFFPart interface

- Apply the interface to all of the structs

- Add a new PECOFFBinary class

- Add a Parts LinkedList that contains IPECOFFPart(s)

Wait, that won't work. That is what I should fill once I get the values. Hrm, OK, let me try again:

- Add a new PECOFFBinary class

- Add a static PartTypes LinkedList that contains Type values

- Add a static constructor that adds the parts in the right order

- ...

Ouch, when I get to the 5th part, I have two different parts that it could be, either the PE32 or the PE32+ optional header. For now I will just put them both and keep going. Fine until I get to the SectionTable which I punted on last time. There can be multiples of these, we had three. And that is before I teased them apart into separate structs. Well, for now I will just put in one and hope for inspiration later, perhaps while I sleep. OK, structure (sort of) defined.
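A minimal sketch of the structure described above, assuming empty placeholder structs and a shortened, hypothetical part order (the real project has more parts, and the PE32 / PE32+ ambiguity is not solved here):

```csharp
using System;
using System.Collections.Generic;

public interface IPECOFFPart { }

// Empty placeholders standing in for the real structs.
public struct MSDOS20Section : IPECOFFPart { }
public struct COFFHeader : IPECOFFPart { }
public struct SectionTable : IPECOFFPart { }

public class PECOFFBinary
{
    // The expected order of part types, shared by every instance.
    public static readonly LinkedList<Type> PartTypes = new LinkedList<Type>();

    // The parts actually read from one file, filled in while reading.
    public LinkedList<IPECOFFPart> Parts { get; } = new LinkedList<IPECOFFPart>();

    static PECOFFBinary()
    {
        // Shortened, hypothetical order for illustration only.
        PartTypes.AddLast(typeof(MSDOS20Section));
        PartTypes.AddLast(typeof(COFFHeader));
        PartTypes.AddLast(typeof(SectionTable));
    }
}
```

A reader could walk `PartTypes` from first to last and add each part it reads to `Parts`. A linked list of single types still cannot express "one of these two" or "repeat N times", which is exactly the problem noted above.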

Now I can go back and add in the IPECOFFPart interface and the Parts LinkedList and update the DissectFile method to read from the PartTypes list and fill the Parts list. So I strip out everything in the method and create two new ones: ReadPECOFFBinary and WritePECOFFBinary. I am just creating them in the Program class for now, but I believe they should move over to the PECOFFBinary class soon. Fill them both with the guts of the using statement and snip out the parts that don't involve the action they are performing.

Wait, found a problem. I had recently extended the printout to put out the starting address of each part just before printing it out. I was using the position in the inputFile, but I will now be reading the whole file and then printing everything out. So, let's go back and add a starting address to each of the structures that can be used when printing them out and update the WriteStartingAddress method to take in the address rather than the stream.

STOP! Too much at once. This isn't refactoring, this is re-writing. Press Control-Z a bunch in the Program.cs file and start over. I like the PECOFFBinary.cs so I will leave it, but the rest needs to be taken a step at a time. This mess is tangled up because I didn't do my testing. Let's get some testing in place before we start messing with the guts of the DissectFile method. As I mentioned, that is going to be another article, and I have rambled on here long enough. ;-) Please keep reading, and comment!

Please try this at home!

Friday, February 10, 2017

Reading Structured Binary files in C#: Part 6

For tonight I just want to dump the sections that we read last time out as binary dumps. I will go back and print out the skipped sections and eventually dissect/decode the sections, but for now I want to just get a feel for the remaining data. To that end, I have added the following code:

to the end of the DissectFile method. The WriteStartingAddress method is as follows:
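The embedded listings are not reproduced here. The sketch below is a guess at their general shape, with hypothetical names and output format: one method prints the current stream position, and another formats a byte array as rows of hex values.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical reconstruction; the output format is an assumption.
public static class DumpSketch
{
    // Prints the current position of the input stream as a hex address.
    public static void WriteStartingAddress(TextWriter output, Stream input)
    {
        output.WriteLine("Starting address: 0x{0:X8}", input.Position);
    }

    // Formats the bytes as rows of up to 16 space-separated hex values.
    public static string ToHexDump(byte[] data)
    {
        var builder = new StringBuilder();
        for (int i = 0; i < data.Length; i += 16)
        {
            int count = Math.Min(16, data.Length - i);
            builder.AppendLine(BitConverter.ToString(data, i, count).Replace('-', ' '));
        }
        return builder.ToString();
    }
}
```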

I have checked in the code to the new repository.

I am also using GitHub Gist to store and format the coding examples. In the earlier articles I used Manoli.net's C# Code Format tool and embedded the code within the article. Let me know which one you like better.

May all your code compile cleanly!


Thursday, February 9, 2017

Creating an open source project: Getting Started

I have been working on the DissectPECOFFBinary project for a bit now, and I have gotten to the stage that I want to share it. The best thing to do is to create a GitHub project, so what steps do I need to go through to get started? Here is what I have found:

1: Create a License file, I am using the MIT License template

The MIT License

4: Install the GitHub Desktop to make it easier

5: Create a new repository in GitHub Desktop

6: Add a check-in note and description and Commit to master

7: Publish the changes to GitHub

That is all for now. That is the minimum I feel comfortable with. There are tons of things to add, including a project site and Wiki. I will continue to post about changes I make to the project as I make them.

1: Create a License file, I am using the MIT License template

The MIT License

Copyright (c) 2017 Larry Smithmier

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

From: https://opensource.org/licenses/MIT

2: Create a README.md file

# Dissect PECOFF Binary #

This is the Source Code location for DissectPECOFFBinary application. Please see the [C# For You](http://www.csharp4u.com/search/label/DissectPCOFFBinary) blog for the latest posts on the application.

*"Sharing is Caring"*

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/). For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or contact [[email protected]](mailto:[email protected]) with any additional questions or comments.

3: Create a CONTRIBUTING.md file

# Contribution guidance

*work in progress*

This repository contains the code described in [Reading Structured Binary Files in C#](http://www.csharp4u.com/2017/02/reading-structured-binary-files-in-c.html "C#4U blog post on reading binary files in C#"). Follow the standard Open Source process on getting started and contributing. See the wiki pages from the main repository for additional details.

---

4: Install the GitHub Desktop to make it easier

5: Create a new repository in GitHub Desktop

Image: Create a new GitHub Repository

Image: Commit changes to master

Image: Publish the changes to GitHub

8: Check your repository!

Image: Check your repository

Keep your code clean!

Reading Structured Binary files in C#: Part 5

The next step is to decode the Section Tables. The COFF header tells us how many there are (NumberOfSections), and every entry shares the same 40-byte format, so we only need one new struct and a loop to pull out the sections. Here is the loop:

    for (int i = 0; i < coffHeader.Value.NumberOfSections; i++)
    {
        SectionTable? sectionTable = inputFile.ReadStructure<SectionTable>();
        Console.WriteLine(sectionTable.ToString());
        sectionTables.Add(sectionTable.Value.Name, sectionTable);
    }
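The loop leans on a ReadStructure<T> extension method that is not shown in this post. For anyone following along without it, here is a minimal sketch of how such a helper could be written. The class name StreamStructureExtensions and the ExamplePair struct are made up for illustration, and the real helper from the earlier post may well differ.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Tiny made-up struct, used only to exercise the helper below.
[StructLayout(LayoutKind.Sequential, Pack = 1)]
public struct ExamplePair
{
    public ushort First;
    public ushort Second;
}

public static class StreamStructureExtensions
{
    // Hypothetical stand-in for the ReadStructure<T> helper used in the loop.
    // Reads Marshal.SizeOf(T) bytes and marshals them into a T.
    // Returns null when the stream ends before a full structure is read.
    public static T? ReadStructure<T>(this Stream stream) where T : struct
    {
        int size = Marshal.SizeOf(typeof(T));
        byte[] buffer = new byte[size];

        // Stream.Read may return fewer bytes than requested, so keep reading.
        int total = 0;
        while (total < size)
        {
            int read = stream.Read(buffer, total, size - total);
            if (read == 0)
            {
                return null;
            }
            total += read;
        }

        // Pin the buffer so the marshaller can copy from a stable address.
        GCHandle handle = GCHandle.Alloc(buffer, GCHandleType.Pinned);
        try
        {
            return (T)Marshal.PtrToStructure(handle.AddrOfPinnedObject(), typeof(T));
        }
        finally
        {
            handle.Free();
        }
    }
}
```

Feeding it a MemoryStream over the bytes 4C 01 03 00 and asking for an ExamplePair should give First = 0x14C and Second = 3 on a little-endian machine. Returning a nullable struct matches the way the loop reads sectionTable.Value.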

Here is the struct:

[StructLayout(LayoutKind.Explicit, CharSet = CharSet.Ansi, Pack = 1)]

public struct SectionTable

{

[FieldOffset(0x0)]

[MarshalAs(UnmanagedType.ByValTStr, SizeConst = 0x8)]

public string Name;

[FieldOffset(0x8)]

[MarshalAs(UnmanagedType.U4)]

UInt32 VirtualSize;

[FieldOffset(0xC)]

[MarshalAs(UnmanagedType.U4)]

UInt32 VirtualAddress;

[FieldOffset(0x10)]

[MarshalAs(UnmanagedType.U4)]

UInt32 SizeOfRawData;

[FieldOffset(0x14)]

[MarshalAs(UnmanagedType.U4)]

UInt32 PointerToRawData;

[FieldOffset(0x18)]

[MarshalAs(UnmanagedType.U4)]

UInt32 PointerToRelocations;

[FieldOffset(0x1C)]

[MarshalAs(UnmanagedType.U4)]

UInt32 PointerToLinenumbers;

[FieldOffset(0x20)]

[MarshalAs(UnmanagedType.U2)]

UInt16 NumberOfRelocations;

[FieldOffset(0x22)]

[MarshalAs(UnmanagedType.U2)]

UInt16 NumberOfLinenumbers;

[FieldOffset(0x24)]

[MarshalAs(UnmanagedType.U4)]

UInt32 Characteristics;

public override string ToString()

{

StringBuilder returnValue = new StringBuilder();

returnValue.AppendFormat("Name: {0}", Name);

returnValue.AppendLine();

returnValue.AppendFormat("VirtualSize: {0}", VirtualSize);

returnValue.AppendLine();

returnValue.AppendFormat("VirtualAddress: 0x{0:X}",

VirtualAddress);

returnValue.AppendLine();

returnValue.AppendFormat("SizeOfRawData: {0}", SizeOfRawData);

returnValue.AppendLine();

returnValue.AppendFormat("PointerToRawData: 0x{0:X}",

PointerToRawData);

returnValue.AppendLine();

returnValue.AppendFormat("PointerToRelocations: 0x{0:X}",

PointerToRelocations);

returnValue.AppendLine();

returnValue.AppendFormat("PointerToLinenumbers: 0x{0:X}",

PointerToLinenumbers);

returnValue.AppendLine();

returnValue.AppendFormat("NumberOfRelocations: {0}",

NumberOfRelocations);

returnValue.AppendLine();

returnValue.AppendFormat("NumberOfLinenumbers: {0}",

NumberOfLinenumbers);

returnValue.AppendLine();

returnValue.AppendFormat("Characteristics: 0x{0:X}",

Characteristics);

returnValue.AppendLine();

returnValue.AppendFormat("Characteristics (decoded): {0}",

DecodeCharacteristics(Characteristics));

returnValue.AppendLine();

return returnValue.ToString();

}

private string DecodeCharacteristics(uint characteristics)

{

List<string> setCharacteristics = new List<string>();

if ((characteristics == 0x00000000))

{

// 0x00000000 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000001) != 0)

{

// 0x00000001 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000002) != 0)

{

// 0x00000002 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000004) != 0)

{

// 0x00000004 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000008) != 0)

{

//IMAGE_SCN_TYPE_NO_PAD 0x00000008 The section should not

// be padded to the next boundary. This flag is

// obsolete and is replaced by

// IMAGE_SCN_ALIGN_1BYTES. This is valid only for

// object files.

setCharacteristics.Add("IMAGE_SCN_TYPE_NO_PAD");

}

if ((characteristics & 0x00000010) != 0)

{

// 0x00000010 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000020) != 0)

{

//IMAGE_SCN_CNT_CODE 0x00000020 The section contains

// executable code.

setCharacteristics.Add("IMAGE_SCN_CNT_CODE");

}

if ((characteristics & 0x00000040) != 0)

{

//IMAGE_SCN_CNT_INITIALIZED_DATA 0x00000040 The section

// contains initialized data.

setCharacteristics.Add("IMAGE_SCN_CNT_INITIALIZED_DATA");

}

if ((characteristics & 0x00000080) != 0)

{

//IMAGE_SCN_CNT_UNINITIALIZED_DATA 0x00000080 The section

// contains uninitialized data.

setCharacteristics.Add("IMAGE_SCN_CNT_UNINITIALIZED_DATA");

}

if ((characteristics & 0x00000100) != 0)

{

//IMAGE_SCN_LNK_OTHER 0x00000100 Reserved for future use.

setCharacteristics.Add("IMAGE_SCN_LNK_OTHER");

}

if ((characteristics & 0x00000200) != 0)

{

//IMAGE_SCN_LNK_INFO 0x00000200 The section contains

// comments or other information. The .drectve

// section has this type. This is valid for object

// files only.

setCharacteristics.Add("IMAGE_SCN_LNK_INFO");

}

if ((characteristics & 0x00000400) != 0)

{

// 0x00000400 Reserved for future use.

setCharacteristics.Add("Reserved flag set");

}

if ((characteristics & 0x00000800) != 0)

{

//IMAGE_SCN_LNK_REMOVE 0x00000800 The section will not

// become part of the image. This is valid only

// for object files.

setCharacteristics.Add("IMAGE_SCN_LNK_REMOVE");

}

if ((characteristics & 0x00001000) != 0)

{

//IMAGE_SCN_LNK_COMDAT 0x00001000 The section contains

// COMDAT data. For more information, see section

// 5.5.6, "COMDAT Sections (Object Only)." This is

// valid only for object files.

setCharacteristics.Add("IMAGE_SCN_LNK_COMDAT");

}

if ((characteristics & 0x00008000) != 0)

{

//IMAGE_SCN_GPREL 0x00008000 The section contains data

// referenced through the global pointer (GP).

setCharacteristics.Add("IMAGE_SCN_GPREL");

}

if ((characteristics & 0x00020000) != 0)

{

//IMAGE_SCN_MEM_PURGEABLE 0x00020000 Reserved for future use.

setCharacteristics.Add("IMAGE_SCN_MEM_PURGEABLE");

}

if ((characteristics & 0x00020000) != 0)

{

//IMAGE_SCN_MEM_16BIT 0x00020000 Reserved for future use.

setCharacteristics.Add("IMAGE_SCN_MEM_16BIT");

}

if ((characteristics & 0x00040000) != 0)

{

//IMAGE_SCN_MEM_LOCKED 0x00040000 Reserved for future use.

setCharacteristics.Add("IMAGE_SCN_MEM_LOCKED");

}

if ((characteristics & 0x00080000) != 0)

{

//IMAGE_SCN_MEM_PRELOAD 0x00080000 Reserved for future

// use.

setCharacteristics.Add("IMAGE_SCN_MEM_PRELOAD");

}

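// The IMAGE_SCN_ALIGN_* values are a 4-bit number in bits 20-23,

// not independent flags, so mask the field and compare.

if ((characteristics & 0x00F00000) == 0x00100000)

{

//IMAGE_SCN_ALIGN_1BYTES 0x00100000 Align data on a 1-byte

// boundary. Valid only for object files.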

setCharacteristics.Add("IMAGE_SCN_ALIGN_1BYTES");

}

if ((characteristics & 0x00F00000) == 0x00200000)

{

//IMAGE_SCN_ALIGN_2BYTES 0x00200000 Align data on a 2-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_2BYTES");

}

if ((characteristics & 0x00F00000) == 0x00300000)

{

//IMAGE_SCN_ALIGN_4BYTES 0x00300000 Align data on a 4-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_4BYTES");

}

if ((characteristics & 0x00F00000) == 0x00400000)

{

//IMAGE_SCN_ALIGN_8BYTES 0x00400000 Align data on an 8-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_8BYTES");

}

if ((characteristics & 0x00F00000) == 0x00500000)

{

//IMAGE_SCN_ALIGN_16BYTES 0x00500000 Align data on a 16-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_16BYTES");

}

if ((characteristics & 0x00F00000) == 0x00600000)

{

//IMAGE_SCN_ALIGN_32BYTES 0x00600000 Align data on a 32-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_32BYTES");

}

if ((characteristics & 0x00F00000) == 0x00700000)

{

//IMAGE_SCN_ALIGN_64BYTES 0x00700000 Align data on a 64-byte

// boundary. Valid only for object files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_64BYTES");

}

if ((characteristics & 0x00F00000) == 0x00800000)

{

//IMAGE_SCN_ALIGN_128BYTES 0x00800000 Align data on a

// 128-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_128BYTES");

}

if ((characteristics & 0x00F00000) == 0x00900000)

{

//IMAGE_SCN_ALIGN_256BYTES 0x00900000 Align data on a

// 256-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_256BYTES");

}

if ((characteristics & 0x00F00000) == 0x00A00000)

{

//IMAGE_SCN_ALIGN_512BYTES 0x00A00000 Align data on a

// 512-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_512BYTES");

}

if ((characteristics & 0x00F00000) == 0x00B00000)

{

//IMAGE_SCN_ALIGN_1024BYTES 0x00B00000 Align data on a

// 1024-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_1024BYTES");

}

if ((characteristics & 0x00F00000) == 0x00C00000)

{

//IMAGE_SCN_ALIGN_2048BYTES 0x00C00000 Align data on a

// 2048-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_2048BYTES");

}

if ((characteristics & 0x00F00000) == 0x00D00000)

{

//IMAGE_SCN_ALIGN_4096BYTES 0x00D00000 Align data on a

// 4096-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_4096BYTES");

}

if ((characteristics & 0x00F00000) == 0x00E00000)

{

//IMAGE_SCN_ALIGN_8192BYTES 0x00E00000 Align data on an

// 8192-byte boundary. Valid only for object

// files.

setCharacteristics.Add("IMAGE_SCN_ALIGN_8192BYTES");

}

if ((characteristics & 0x01000000) != 0)

{

//IMAGE_SCN_LNK_NRELOC_OVFL 0x01000000 The section

// contains extended relocations.

setCharacteristics.Add("IMAGE_SCN_LNK_NRELOC_OVFL");

}

if ((characteristics & 0x02000000) != 0)

{

// IMAGE_SCN_MEM_DISCARDABLE 0x02000000 The section can

// be discarded as needed.

setCharacteristics.Add("IMAGE_SCN_MEM_DISCARDABLE");

}

if ((characteristics & 0x04000000) != 0)

{

// IMAGE_SCN_MEM_NOT_CACHED 0x04000000 The section

// cannot be cached.

setCharacteristics.Add("IMAGE_SCN_MEM_NOT_CACHED");

}

if ((characteristics & 0x08000000) != 0)

{

// IMAGE_SCN_MEM_NOT_PAGED 0x08000000 The section is not

// pageable.

setCharacteristics.Add("IMAGE_SCN_MEM_NOT_PAGED");

}

if ((characteristics & 0x10000000) != 0)

{

// IMAGE_SCN_MEM_SHARED 0x10000000 The section can be

// shared in memory.

setCharacteristics.Add("IMAGE_SCN_MEM_SHARED");

}

if ((characteristics & 0x20000000) != 0)

{

// IMAGE_SCN_MEM_EXECUTE 0x20000000 The section can be

// executed as code.

setCharacteristics.Add("IMAGE_SCN_MEM_EXECUTE");

}

if ((characteristics & 0x40000000) != 0)

{

// IMAGE_SCN_MEM_READ 0x40000000 The section can be read.

setCharacteristics.Add("IMAGE_SCN_MEM_READ");

}

if ((characteristics & 0x80000000) != 0)

{

// IMAGE_SCN_MEM_WRITE 0x80000000 The section can be

// written to.

setCharacteristics.Add("IMAGE_SCN_MEM_WRITE");

}

return string.Join(",", setCharacteristics);

}

}
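One detail of the Characteristics field is worth calling out: the IMAGE_SCN_ALIGN_* values are not separate bits. They are the numbers 1 through 14 stored in bits 20 to 23, so a value like 0x00300000 (4 bytes) shares bits with 0x00100000 and 0x00200000. Here is a small sketch of decoding that field as a number instead of testing bit by bit. The class and method names are my own invention and are not part of the project.

```csharp
using System;

public static class SectionAlignment
{
    // Bits 20-23 of the section Characteristics hold the alignment code.
    private const uint AlignMask = 0x00F00000;

    // Returns the alignment in bytes (1, 2, 4 ... 8192),
    // or 0 when the field is empty or holds an undefined code.
    public static int DecodeAlignmentBytes(uint characteristics)
    {
        uint code = (characteristics & AlignMask) >> 20;
        if (code == 0 || code > 14)
        {
            return 0;
        }
        // Code 1 means 1 byte, code 2 means 2 bytes, code 3 means 4 bytes...
        return 1 << (int)(code - 1);
    }
}
```

Because the codes run in order, the byte count is just a power of two, which saves writing out fourteen separate comparisons. The three sections in the sample executable all carry an empty alignment field, which fits the spec's note that these values are only valid for object files.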

And here is the output of the new portion of the code:

    Name: .text
    VirtualSize: 948
    VirtualAddress: 0x2000
    SizeOfRawData: 1024
    PointerToRawData: 0x200
    PointerToRelocations: 0x0
    PointerToLinenumbers: 0x0
    NumberOfRelocations: 0
    NumberOfLinenumbers: 0
    Characteristics: 0x60000020
    Characteristics (decoded): IMAGE_SCN_CNT_CODE,IMAGE_SCN_MEM_EXECUTE,IMAGE_SCN_MEM_READ

    Name: .rsrc
    VirtualSize: 720
    VirtualAddress: 0x4000
    SizeOfRawData: 1024
    PointerToRawData: 0x600
    PointerToRelocations: 0x0
    PointerToLinenumbers: 0x0
    NumberOfRelocations: 0
    NumberOfLinenumbers: 0
    Characteristics: 0x40000040
    Characteristics (decoded): IMAGE_SCN_CNT_INITIALIZED_DATA,IMAGE_SCN_MEM_READ

    Name: .reloc
    VirtualSize: 12
    VirtualAddress: 0x6000
    SizeOfRawData: 512
    PointerToRawData: 0xA00
    PointerToRelocations: 0x0
    PointerToLinenumbers: 0x0
    NumberOfRelocations: 0
    NumberOfLinenumbers: 0
    Characteristics: 0x42000040
    Characteristics (decoded): IMAGE_SCN_CNT_INITIALIZED_DATA,IMAGE_SCN_MEM_DISCARDABLE,IMAGE_SCN_MEM_READ

    Press return to exit
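As a quick illustration of what these numbers are good for: VirtualAddress and PointerToRawData together let you turn an address in the loaded image (an RVA) into a position in the file. The sketch below is not part of the project, and the RvaMapper name is made up. It uses the .text values above together with the AddressOfEntryPoint of 0x23AE from the Part 4 output.

```csharp
using System;

public static class RvaMapper
{
    // True when the RVA falls inside the section's virtual range.
    public static bool Contains(uint rva, uint virtualAddress, uint virtualSize)
    {
        return rva >= virtualAddress && rva - virtualAddress < virtualSize;
    }

    // Translates an RVA inside a section to its offset in the file.
    // Callers should check Contains first; no range checking is done here.
    public static uint ToFileOffset(uint rva, uint virtualAddress, uint pointerToRawData)
    {
        return rva - virtualAddress + pointerToRawData;
    }
}
```

For the sample file, 0x23AE sits inside .text (0x2000 plus 948 bytes), so the entry point should be found at 0x23AE - 0x2000 + 0x200 = 0x5AE in the file.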

The code is getting longer, so the next post will be about creating an open source project on GitHub. I am going to release it under the MIT license. That way you can try it out yourself, and perhaps help me build something interesting.

Keep coding!

Tuesday, February 7, 2017

Reading Structured Binary files in C#: Part 4

Now the fun begins. The next section is the COFF Optional Header, which is variable in size. We need the SizeOfOptionalHeader value from the COFF Header to get the size, but we also need the first field (the Magic Number talked about in Dissecting C# Executables: Part 4) to determine what type of image it is: PE32 or PE32+. We could just pull the Magic number and then switch, but both Optional Header variants start with the same Standard Fields, so let's leverage that and just pull them in. Here is the struct for the COFF Optional Header standard fields:

[StructLayout(LayoutKind.Explicit, CharSet = CharSet.Ansi, Pack = 1)]

public struct COFFOptionalHeaderStandardFields

{

[FieldOffset(0x0)]

[MarshalAs(UnmanagedType.U2)]

public UInt16 Magic;

[FieldOffset(0x2)]

[MarshalAs(UnmanagedType.U1)]

byte MajorLinkerVersion;

[FieldOffset(0x3)]

[MarshalAs(UnmanagedType.U1)]

byte MinorLinkerVersion;

[FieldOffset(0x4)]

[MarshalAs(UnmanagedType.U4)]

UInt32 SizeOfCode;

[FieldOffset(0x8)]

[MarshalAs(UnmanagedType.U4)]

UInt32 SizeOfInitializedData;

[FieldOffset(0xC)]

[MarshalAs(UnmanagedType.U4)]

UInt32 SizeOfUninitializedData;

[FieldOffset(0x10)]

[MarshalAs(UnmanagedType.U4)]

UInt32 AddressOfEntryPoint;

[FieldOffset(0x14)]

[MarshalAs(UnmanagedType.U4)]

UInt32 BaseOfCode;

public override string ToString()

{

StringBuilder returnValue = new StringBuilder();

returnValue.AppendFormat("Magic: 0x{0:X}", Magic);

returnValue.AppendLine();

returnValue.AppendFormat("Magic (decoded): {0}",

DecodeMagic(Magic));

returnValue.AppendLine();

returnValue.AppendFormat("MajorLinkerVersion: {0}",

MajorLinkerVersion);

returnValue.AppendLine();

returnValue.AppendFormat("MinorLinkerVersion: {0}",

MinorLinkerVersion);

returnValue.AppendLine();

returnValue.AppendFormat("SizeOfCode: {0}", SizeOfCode);

returnValue.AppendLine();

returnValue.AppendFormat("SizeOfInitializedData: {0}",

SizeOfInitializedData);

returnValue.AppendLine();

returnValue.AppendFormat("SizeOfUninitializedData: {0}",

SizeOfUninitializedData);

returnValue.AppendLine();

returnValue.AppendFormat("AddressOfEntryPoint: 0x{0:X}",

AddressOfEntryPoint);

returnValue.AppendLine();

returnValue.AppendFormat("BaseOfCode: 0x{0:X}", BaseOfCode);

returnValue.AppendLine();

return returnValue.ToString();

}

private string DecodeMagic(ushort magic)

{

string returnValue = string.Empty;

switch (magic)

{

case 0x10b:

returnValue = "PE32";

break;

case 0x20b:

returnValue = "PE32+";

break;

default:

returnValue = "Undefined";

break;

}

return returnValue;

}

}

Once we read them in, we can determine if we need to pull in the BaseOfData field (present only in PE32) and which sizes and offsets to use for the Windows-Specific fields. I am going to cheat a little bit and stash the BaseOfData with the PE32 Windows-Specific fields rather than pulling it in separately. The only problem with pulling these fields out separately is that the offsets in the struct won't match up nicely with the documentation. It isn't a big deal really, but anything that adds cognitive overhead is to be avoided when possible. Wait though, I am already deviating pretty heavily because I am giving my offsets in hex rather than decimal. Never mind.

The struct for the PE32 Windows-Specific fields section is quite long, so I have included it as a separate page. I built a PE32+ Windows-Specific struct, but will leave it out here since we aren't going to be using it for any of our examples. The output now is an impressive length and is:

    Signature: MZ?
    OffsetToPEHeader: 80

    PE Signature: PE

    MachineType: 0x14C
    MachineType (decoded): IMAGE_FILE_MACHINE_I386
    NumberOfSections: 3
    TimeDateStamp: 0x58475109
    PointerToSymbolTable: 0x0
    NumberOfSymbols: 0
    SizeOfOptionalHeader: 224
    Characteristics: 0x102
    Characteristics (decoded): IMAGE_FILE_EXECUTABLE_IMAGE,IMAGE_FILE_32BIT_MACHINE

    Magic: 0x10B
    Magic (decoded): PE32
    MajorLinkerVersion: 8
    MinorLinkerVersion: 0
    SizeOfCode: 1024
    SizeOfInitializedData: 1536
    SizeOfUninitializedData: 0
    AddressOfEntryPoint: 0x23AE
    BaseOfCode: 0x2000

    BaseOfData: 0x4000
    ImageBase: 0x400000
    SectionAlignment: 0x2000
    FileAlignment: 0x200
    MajorOperatingSystemVersion: 4
    MinorOperatingSystemVersion: 0
    MajorImageVersion: 0
    MinorImageVersion: 0
    MajorSubsystemVersion: 4
    MinorSubsystemVersion: 0
    Win32VersionValue: 0
    SizeOfImage: 32768
    SizeOfHeaders: 512
    CheckSum: 0x0
    Subsystem: 0x3
    Subsystem (decoded): IMAGE_SUBSYSTEM_WINDOWS_CUI
    DllCharacteristics: 0x8540
    DllCharacteristics (decoded): IMAGE_DLLCHARACTERISTICS_DYNAMIC_BASE,IMAGE_DLLCHARACTERISTICS_NX_COMPAT,IMAGE_DLLCHARACTERISTICS_NO_SEH,IMAGE_DLLCHARACTERISTICS_TERMINAL_SERVER_AWARE
    SizeOfStackReserve: 1048576
    SizeOfStackCommit: 4096
    SizeOfHeapReserve: 1048576
    SizeOfHeapCommit: 4096
    LoaderFlags: 0x0
    NumberOfRvaAndSizes: 0x10

    Press return to exit
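The numbers in this output can be cross-checked against each other. In the PE format the Standard Fields plus the Windows-Specific fields take 96 bytes for PE32 (28 + 68) and 112 bytes for PE32+ (24 + 88), and each data directory entry that follows is 8 bytes. Here is a rough sketch of that arithmetic. The OptionalHeaderLayout class and its members are names I made up for this example.

```csharp
using System;

public static class OptionalHeaderLayout
{
    public const ushort Pe32Magic = 0x10b;
    public const ushort Pe32PlusMagic = 0x20b;

    // Bytes taken by the Standard Fields plus the Windows-Specific fields,
    // which is also the offset of the first data directory entry.
    public static int FixedFieldsSize(ushort magic)
    {
        switch (magic)
        {
            case Pe32Magic:
                return 28 + 68;   // PE32 carries BaseOfData, so 28 standard bytes
            case Pe32PlusMagic:
                return 24 + 88;   // PE32+ drops BaseOfData and widens several fields
            default:
                throw new ArgumentException("Unknown optional header magic", nameof(magic));
        }
    }

    // Size the optional header should have for a given directory count.
    public static int ExpectedSize(ushort magic, uint numberOfRvaAndSizes)
    {
        return FixedFieldsSize(magic) + 8 * (int)numberOfRvaAndSizes;
    }
}
```

With Magic 0x10B and NumberOfRvaAndSizes 0x10 this gives 96 + 16 * 8 = 224, which agrees with the SizeOfOptionalHeader reported by the COFF Header.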

This is looking good so far. Almost caught up with the Dissection articles. We should sync up by the end of the week and I will run them in parallel after that.

Keep your code clean!

Monday, February 6, 2017

Reading Structured Binary files in C#: Part 3

Just before the COFF Header we have the PE Signature which is short, but since we are reading and showing every section we need to start there. Here is the struct:

[StructLayout(LayoutKind.Explicit, CharSet = CharSet.Ansi, Pack = 1)]

public struct PESignature

{

[FieldOffset(0x0)]

[MarshalAs(UnmanagedType.ByValTStr, SizeConst = 0x4)]

public string Signature;

public override string ToString()

{

StringBuilder returnValue = new StringBuilder();

returnValue.AppendFormat("PE Signature: {0}", Signature);

returnValue.AppendLine();

return returnValue.ToString();

}

}

And with it in place our output becomes:

    Signature: MZ?
    OffsetToPEHeader: 80

    PE Signature: PE

    Press return to exit
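The signature is also a handy sanity check before dissecting anything else. The PE format keeps the offset of the signature at file position 0x3C, and the signature itself is the four bytes 'P', 'E', 0, 0. Below is a small standalone sketch of that check that works on raw bytes rather than the structs from this series. PeSignatureCheck is a hypothetical name, not something from the project.

```csharp
using System;
using System.IO;

public static class PeSignatureCheck
{
    // Returns true when the seekable stream has "PE\0\0" at the offset stored at 0x3C.
    public static bool HasPeSignature(Stream stream)
    {
        if (stream.Length < 0x40)
        {
            return false; // too short to even hold the offset field
        }

        byte[] buffer = new byte[4];
        stream.Seek(0x3C, SeekOrigin.Begin);
        if (!Fill(stream, buffer))
        {
            return false;
        }

        // The offset is stored little-endian; assemble it by hand so the
        // result does not depend on the machine running the code.
        uint peOffset = (uint)(buffer[0] | (buffer[1] << 8) | (buffer[2] << 16) | (buffer[3] << 24));
        if (peOffset > stream.Length - 4)
        {
            return false;
        }

        stream.Seek(peOffset, SeekOrigin.Begin);
        if (!Fill(stream, buffer))
        {
            return false;
        }
        return buffer[0] == (byte)'P' && buffer[1] == (byte)'E'
            && buffer[2] == 0 && buffer[3] == 0;
    }

    // Reads until the buffer is full; false if the stream ends early.
    private static bool Fill(Stream stream, byte[] buffer)
    {
        int total = 0;
        while (total < buffer.Length)
        {
            int read = stream.Read(buffer, total, buffer.Length - total);
            if (read == 0)
            {
                return false;
            }
            total += read;
        }
        return true;
    }
}
```

Calling HasPeSignature on a File.OpenRead stream before reading the COFF Header gives a cheap way to reject files that are not PE images at all.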

The COFF Header contains the Machine Type and the Characteristics which need to be decoded to properly display. That means adding some methods to decode them. Here is the struct for the COFF Header:

[StructLayout(LayoutKind.Explicit, CharSet = CharSet.Ansi, Pack = 1)]

public struct COFFHeader

{

[FieldOffset(0x0)]

[MarshalAs(UnmanagedType.U2)]

UInt16 MachineType;

[FieldOffset(0x2)]

[MarshalAs(UnmanagedType.U2)]

UInt16 NumberOfSections;

[FieldOffset(0x4)]

[MarshalAs(UnmanagedType.U4)]

UInt32 TimeDateStamp;

[FieldOffset(0x8)]

[MarshalAs(UnmanagedType.U4)]

UInt32 PointerToSymbolTable;

[FieldOffset(0xC)]

[MarshalAs(UnmanagedType.U4)]

UInt32 NumberOfSymbols;

[FieldOffset(0x10)]

[MarshalAs(UnmanagedType.U2)]

UInt16 SizeOfOptionalHeader;

[FieldOffset(0x12)]

[MarshalAs(UnmanagedType.U2)]

UInt16 Characteristics;

public override string ToString()

{

StringBuilder returnValue = new StringBuilder();

returnValue.AppendFormat("MachineType: 0x{0:X}", MachineType);

returnValue.AppendLine();

returnValue.AppendFormat("MachineType (decoded): {0}",

DecodeMachineType(MachineType));

returnValue.AppendLine();

returnValue.AppendFormat("NumberOfSections: {0}",

NumberOfSections);

returnValue.AppendLine();

returnValue.AppendFormat("TimeDateStamp: 0x{0:X}", TimeDateStamp);

returnValue.AppendLine();

returnValue.AppendFormat("PointerToSymbolTable: 0x{0:X}",

PointerToSymbolTable);

returnValue.AppendLine();

returnValue.AppendFormat("NumberOfSymbols: {0}",

NumberOfSymbols);

returnValue.AppendLine();

returnValue.AppendFormat("SizeOfOptionalHeader: {0}",

SizeOfOptionalHeader);

returnValue.AppendLine();

returnValue.AppendFormat("Characteristics: 0x{0:X}",

Characteristics);

returnValue.AppendLine();

returnValue.AppendFormat("Characteristics (decoded): {0}",

DecodeCharacteristics(Characteristics));

returnValue.AppendLine();

return returnValue.ToString();

}

public string DecodeMachineType(UInt16 machineType)

{

string returnValue;

switch (machineType)

{

case 0x0:

//IMAGE_FILE_MACHINE_UNKNOWN 0x0 The contents of this

// field are assumed to be

// applicable to any

// machine type

returnValue = "IMAGE_FILE_MACHINE_UNKNOWN";

break;

case 0x1d3:

//IMAGE_FILE_MACHINE_AM33 0x1d3 Matsushita AM33

returnValue = "IMAGE_FILE_MACHINE_AM33";

break;

case 0x8664:

//IMAGE_FILE_MACHINE_AMD64 0x8664 x64

returnValue = "IMAGE_FILE_MACHINE_AMD64";

break;

case 0x1c0:

//IMAGE_FILE_MACHINE_ARM 0x1c0 ARM little endian

returnValue = "IMAGE_FILE_MACHINE_ARM";

break;

case 0xaa64:

//IMAGE_FILE_MACHINE_ARM64 0xaa64 ARM64 little endian

returnValue = "IMAGE_FILE_MACHINE_ARM64";

break;

case 0x1c4:

//IMAGE_FILE_MACHINE_ARMNT 0x1c4 ARM Thumb-2 little

// endian

returnValue = "IMAGE_FILE_MACHINE_ARMNT";

break;

case 0xebc:

//IMAGE_FILE_MACHINE_EBC 0xebc EFI byte code

returnValue = "IMAGE_FILE_MACHINE_EBC";

break;

case 0x14c:

//IMAGE_FILE_MACHINE_I386 0x14c Intel 386 or later

// processors and

// compatible processors

returnValue = "IMAGE_FILE_MACHINE_I386";

break;

case 0x200:

//IMAGE_FILE_MACHINE_IA64 0x200 Intel Itanium processor

// family

returnValue = "IMAGE_FILE_MACHINE_IA64";

break;

case 0x9041:

//IMAGE_FILE_MACHINE_M32R 0x9041 Mitsubishi M32R little

// endian

returnValue = "IMAGE_FILE_MACHINE_M32R";

break;

case 0x266:

//IMAGE_FILE_MACHINE_MIPS16 0x266 MIPS16

returnValue = "IMAGE_FILE_MACHINE_MIPS16";

break;

case 0x366:

//IMAGE_FILE_MACHINE_MIPSFPU 0x366 MIPS with FPU

returnValue = "IMAGE_FILE_MACHINE_MIPSFPU";

break;

case 0x466:

//IMAGE_FILE_MACHINE_MIPSFPU16 0x466 MIPS16 with FPU

returnValue = "IMAGE_FILE_MACHINE_MIPSFPU16";

break;

case 0x1f0:

//IMAGE_FILE_MACHINE_POWERPC 0x1f0 Power PC little

// endian

returnValue = "IMAGE_FILE_MACHINE_POWERPC";

break;

case 0x1f1:

//IMAGE_FILE_MACHINE_POWERPCFP 0x1f1 Power PC with

// floating point support

returnValue = "IMAGE_FILE_MACHINE_POWERPCFP";

break;

case 0x166:

//IMAGE_FILE_MACHINE_R4000 0x166 MIPS little endian

returnValue = "IMAGE_FILE_MACHINE_R4000";

break;

case 0x5032:

//IMAGE_FILE_MACHINE_RISCV32 0x5032 RISC-V 32-bit

// address space

returnValue = "IMAGE_FILE_MACHINE_RISCV32";

break;

case 0x5064:

//IMAGE_FILE_MACHINE_RISCV64 0x5064 RISC-V 64-bit

// address space

returnValue = "IMAGE_FILE_MACHINE_RISCV64";

break;

case 0x5128:

//IMAGE_FILE_MACHINE_RISCV128 0x5128 RISC-V 128-bit

// address space

returnValue = "IMAGE_FILE_MACHINE_RISCV128";

break;

case 0x1a2:

//IMAGE_FILE_MACHINE_SH3 0x1a2 Hitachi SH3

returnValue = "IMAGE_FILE_MACHINE_SH3";

break;

case 0x1a3:

//IMAGE_FILE_MACHINE_SH3DSP 0x1a3 Hitachi SH3 DSP

returnValue = "IMAGE_FILE_MACHINE_SH3DSP";

break;

case 0x1a6:

//IMAGE_FILE_MACHINE_SH4 0x1a6 Hitachi SH4

returnValue = "IMAGE_FILE_MACHINE_SH4";

break;

case 0x1a8:

//IMAGE_FILE_MACHINE_SH5 0x1a8 Hitachi SH5

returnValue = "IMAGE_FILE_MACHINE_SH5";

break;

case 0x1c2:

//IMAGE_FILE_MACHINE_THUMB 0x1c2 Thumb

returnValue = "IMAGE_FILE_MACHINE_THUMB";

break;

case 0x169:

//IMAGE_FILE_MACHINE_WCEMIPSV2 0x169 MIPS little-endian

// WCE v2

returnValue = "IMAGE_FILE_MACHINE_WCEMIPSV2";

break;

default:

returnValue = "Unknown Machine Type";

break;

}

return returnValue;

}

private string DecodeCharacteristics(ushort characteristics)

{

List<string> setCharacteristics = new List<string>();

if((characteristics & 0x0001) != 0)

{

//IMAGE_FILE_RELOCS_STRIPPED 0x0001 Image only, Windows CE,

// and Microsoft Windows NT®

// and later. This indicates

// that the file does not

// contain base relocations

// and must therefore be loaded

// at its preferred base

// address. If the base address

// is not available, the loader

// reports an error. The default

// behavior of the linker is

// to strip base relocations

// from executable (EXE) files.

setCharacteristics.Add("IMAGE_FILE_RELOCS_STRIPPED");

}

if ((characteristics & 0x0002) != 0)

{

//IMAGE_FILE_EXECUTABLE_IMAGE 0x0002 Image only. This

// indicates that the image

// file is valid and can be run.

// If this flag is not set, it

// indicates a linker error.

setCharacteristics.Add("IMAGE_FILE_EXECUTABLE_IMAGE");

}

if ((characteristics & 0x0004) != 0)

{

//IMAGE_FILE_LINE_NUMS_STRIPPED 0x0004 COFF line numbers

// have been removed. This

// flag is deprecated and

// should be zero.

setCharacteristics.Add("IMAGE_FILE_LINE_NUMS_STRIPPED");

}

if ((characteristics & 0x0008) != 0)

{

//IMAGE_FILE_LOCAL_SYMS_STRIPPED 0x0008 COFF symbol table

// entries for local symbols

// have been removed. This flag

// is deprecated and should

// be zero.

setCharacteristics.Add("IMAGE_FILE_LOCAL_SYMS_STRIPPED");

}

if ((characteristics & 0x0010) != 0)

{

//IMAGE_FILE_AGGRESSIVE_WS_TRIM 0x0010 Obsolete.

// Aggressively trim working

// set. This flag is deprecated

// for Windows 2000 and later

// and must be zero.

setCharacteristics.Add("IMAGE_FILE_AGGRESSIVE_WS_TRIM");

}

if ((characteristics & 0x0020) != 0)

{

//IMAGE_FILE_LARGE_ADDRESS_AWARE 0x0020 Application can

// handle > 2 GB addresses.

setCharacteristics.Add("IMAGE_FILE_LARGE_ADDRESS_AWARE");

}

if ((characteristics & 0x0040) != 0)

{

//RESERVED 0x0040 This flag is reserved for future use.

setCharacteristics.Add("RESERVED");

}

if ((characteristics & 0x0080) != 0)

{

//IMAGE_FILE_BYTES_REVERSED_LO 0x0080 Little endian: the

// least significant bit (LSB)

// precedes the most significant

// bit (MSB) in memory. This flag

// is deprecated and should be

// zero.

setCharacteristics.Add("IMAGE_FILE_BYTES_REVERSED_LO");

}

if ((characteristics & 0x0100) != 0)

{

//IMAGE_FILE_32BIT_MACHINE 0x0100 Machine is based on a

// 32-bit-word architecture.

setCharacteristics.Add("IMAGE_FILE_32BIT_MACHINE");

}

if ((characteristics & 0x0200) != 0)

{

//IMAGE_FILE_DEBUG_STRIPPED 0x0200 Debugging information is

// removed from the image file.

setCharacteristics.Add("IMAGE_FILE_DEBUG_STRIPPED");

}

if ((characteristics & 0x0400) != 0)

{

//IMAGE_FILE_REMOVABLE_RUN_FROM_SWAP 0x0400 If the image is

// on removable media, fully

// load it and copy it to the

// swap file.

setCharacteristics.Add("IMAGE_FILE_REMOVABLE_RUN_FROM_SWAP");

}

if ((characteristics & 0x0800) != 0)

{

//IMAGE_FILE_NET_RUN_FROM_SWAP 0x0800 If the image is on

// network media, fully load it

// and copy it to the

// swap file.

setCharacteristics.Add("IMAGE_FILE_NET_RUN_FROM_SWAP");

}

if ((characteristics & 0x1000) != 0)

{

//IMAGE_FILE_SYSTEM 0x1000 The image file is a system file,

// not a user program.

setCharacteristics.Add("IMAGE_FILE_SYSTEM");

}

if ((characteristics & 0x2000) != 0)

{

//IMAGE_FILE_DLL 0x2000 The image file is a dynamic-link

// library (DLL). Such files are

// considered executable files

// for almost all purposes,

// although they cannot be

// directly run.

setCharacteristics.Add("IMAGE_FILE_DLL");

}

if ((characteristics & 0x4000) != 0)

{

//IMAGE_FILE_UP_SYSTEM_ONLY 0x4000 The file should be run

// only on a uniprocessor

// machine.

setCharacteristics.Add("IMAGE_FILE_UP_SYSTEM_ONLY");

}

if ((characteristics & 0x8000) != 0)

{

//IMAGE_FILE_BYTES_REVERSED_HI 0x8000 Big endian: the MSB

// precedes the LSB in memory.

// This flag is deprecated and

// should be zero.

setCharacteristics.Add("IMAGE_FILE_BYTES_REVERSED_HI");

}

return string.Join(",", setCharacteristics);

}

}

And here is the output once it is in place:

    Signature: MZ?
    OffsetToPEHeader: 80

    PE Signature: PE

    MachineType: 0x14C
    MachineType (decoded): IMAGE_FILE_MACHINE_I386
    NumberOfSections: 3
    TimeDateStamp: 0x58475109
    PointerToSymbolTable: 0x0
    NumberOfSymbols: 0
    SizeOfOptionalHeader: 224
    Characteristics: 0x102
    Characteristics (decoded): IMAGE_FILE_EXECUTABLE_IMAGE,IMAGE_FILE_32BIT_MACHINE

    Press return to exit
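For comparison, here is a different way to get at two of these values. It is not how the struct above does it. A [Flags] enum lets the runtime build the list of set Characteristics, and the TimeDateStamp is documented as seconds since January 1, 1970, so it can be turned into a date. The type and method names below are my own, and I have only filled in the 16 flags from the decode method above.

```csharp
using System;

// Member names mirror the specification so ToString() prints them directly.
[Flags]
public enum CoffCharacteristics : ushort
{
    IMAGE_FILE_RELOCS_STRIPPED = 0x0001,
    IMAGE_FILE_EXECUTABLE_IMAGE = 0x0002,
    IMAGE_FILE_LINE_NUMS_STRIPPED = 0x0004,
    IMAGE_FILE_LOCAL_SYMS_STRIPPED = 0x0008,
    IMAGE_FILE_AGGRESSIVE_WS_TRIM = 0x0010,
    IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020,
    RESERVED = 0x0040,
    IMAGE_FILE_BYTES_REVERSED_LO = 0x0080,
    IMAGE_FILE_32BIT_MACHINE = 0x0100,
    IMAGE_FILE_DEBUG_STRIPPED = 0x0200,
    IMAGE_FILE_REMOVABLE_RUN_FROM_SWAP = 0x0400,
    IMAGE_FILE_NET_RUN_FROM_SWAP = 0x0800,
    IMAGE_FILE_SYSTEM = 0x1000,
    IMAGE_FILE_DLL = 0x2000,
    IMAGE_FILE_UP_SYSTEM_ONLY = 0x4000,
    IMAGE_FILE_BYTES_REVERSED_HI = 0x8000
}

public static class CoffHeaderDecoding
{
    // Lets Enum.ToString() list the set flags instead of a chain of ifs.
    public static string DescribeCharacteristics(ushort raw)
    {
        return ((CoffCharacteristics)raw).ToString();
    }

    // Interprets the raw value as seconds since 1970-01-01 UTC.
    // Assumption: the linker wrote a real timestamp; some build tools
    // store other data in this field.
    public static DateTimeOffset DecodeTimeDateStamp(uint raw)
    {
        return DateTimeOffset.FromUnixTimeSeconds(raw);
    }
}
```

The trade-off is that the enum version loses the explanatory comments that sit next to each if block, and a value of zero prints as "0". For a tool whose whole point is to teach the format, the longer hand-written version may still be the better fit.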

Since this post is already so code heavy, here is the Program class for the console application:

    class Program
    {
        static void Main(string[] args)
        {
            var options = new Options();
            if (CommandLine.Parser.Default.ParseArguments(args, options))
            {
                if (!string.IsNullOrEmpty(options.FileName) &&
                    File.Exists(options.FileName))
                {
                    DissectFile(options.FileName);
                }
                else
                {
                    Console.WriteLine(options.GetUsage());
                }
            }
            if (options.ShowingHelp)
            {
                return;
            }
            Console.WriteLine("Press return to exit");
            Console.ReadLine();
        }

        private static void DissectFile(string fileName)
        {
            using (FileStream inputFile = File.OpenRead(fileName))
            {
                MSDOS20Section? msdos20Section =
                    inputFile.ReadStructure<MSDOS20Section>();
                Console.WriteLine(msdos20Section.ToString());
                PESignature? peSignature =
                    inputFile.ReadStructure<PESignature>();
                Console.WriteLine(peSignature.ToString());
                COFFHeader? coffHeader =
                    inputFile.ReadStructure<COFFHeader>();
                Console.WriteLine(coffHeader.ToString());
            }
        }
    }

A lot of progress! More to come. Keep coding!
